2 Hadoop

Bootcamp, Bigdata, 2020-12-17

Hadoop

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage.

- Written in Java

- Open Source

- Designed to run on commodity hardware

- Master-slave design

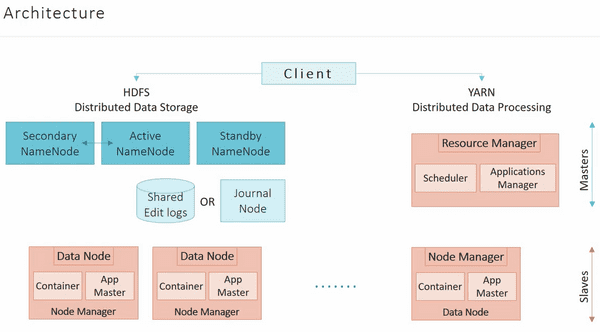

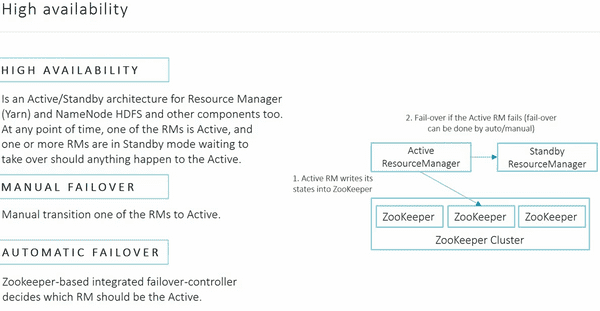

- Which node is responsible for high availability in Hadoop? The Standby NameNode.

- Secondary NameNode: a dedicated node in an HDFS cluster whose main function is to take checkpoints of the file system metadata held on the NameNode. It is not a backup NameNode; it only checkpoints the NameNode's file system namespace.
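To make the checkpoint idea concrete, here is a minimal toy sketch in Python. It assumes the usual HDFS picture, which these notes do not spell out: the NameNode keeps a namespace snapshot (the fsimage) plus a log of changes since that snapshot (the edit log), and a checkpoint merges the two. The class `ToyNameNode` and the function `checkpoint` are hypothetical names for illustration, not Hadoop APIs.

```python
from dataclasses import dataclass, field


@dataclass
class ToyNameNode:
    """Toy stand-in for a NameNode: a namespace snapshot plus an edit log."""
    fsimage: dict = field(default_factory=dict)  # path -> metadata at last checkpoint
    edits: list = field(default_factory=list)    # operations logged since then

    def create(self, path, size):
        self.edits.append(("create", path, size))

    def delete(self, path):
        self.edits.append(("delete", path, None))


def checkpoint(namenode):
    """Replay the edit log onto a copy of the snapshot, then empty the log."""
    merged = dict(namenode.fsimage)
    for op, path, size in namenode.edits:
        if op == "create":
            merged[path] = {"size": size}
        elif op == "delete":
            merged.pop(path, None)
    namenode.fsimage = merged
    namenode.edits = []
    return merged


nn = ToyNameNode()
nn.create("/data/a.csv", 128)
nn.create("/data/b.csv", 64)
nn.delete("/data/a.csv")
snapshot = checkpoint(nn)
print(snapshot)  # {'/data/b.csv': {'size': 64}}
```

The sketch only merges metadata and never takes over serving requests, which matches the point above: checkpointing is housekeeping, not a standby or backup role.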

5 V's: volume, velocity, variety, veracity, value

Pros

- Scalability

- Flexibility

- Fault-tolerance

- Computing power

Cons

- Security concerns

- Overkill for small data

- Potential stability issues

- High costs for infrastructure

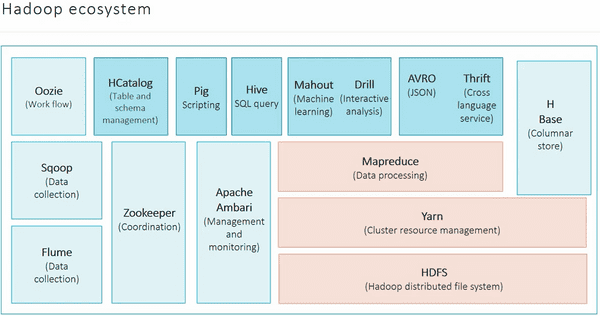

Ecosystem

- Oozie: Workflow scheduling

- HCatalog: Table and schema management

- Pig: Scripting

- Hive: SQL queries over data in HDFS or S3

  Apache Hive is a data warehouse software project built on top of Apache Hadoop for providing data query and analysis. Hive gives a SQL-like interface to query data stored in various databases and file systems that integrate with Hadoop.

- Mahout: Machine learning

- Drill: Interactive analysis

- Avro: Data serialization (schemas are defined in JSON)

- Thrift: Cross-language services

- HBase: Columnar store

- Sqoop: Data collection

- Flume: Data collection

- ZooKeeper: Coordination

- Apache Ambari: Management and monitoring

- MapReduce: Data processing (batch processing; Spark is an alternative)

- YARN: Cluster resource management

- HDFS: Hadoop Distributed File System

What do Pig, Hive and Spark have in common? They’re programming alternatives to MapReduce
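As a reminder of what those tools replace, here is a minimal single-process sketch of the MapReduce model in plain Python, using word count as the example. It is not Hadoop code: the function names `map_phase`, `shuffle` and `reduce_phase` and the sample input are made up for illustration, and a real job would run the same three stages distributed across the cluster.

```python
from collections import defaultdict


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reducer: collapse the values for one key into a single count."""
    return key, sum(values)


lines = ["big data big clusters", "data moves to compute"]
mapped = [pair for line in lines for pair in map_phase(line)]
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)
```

Pig, Hive and Spark let you express the same computation as a script, a SQL-like query or a chain of transformations instead of hand-written mapper and reducer functions.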

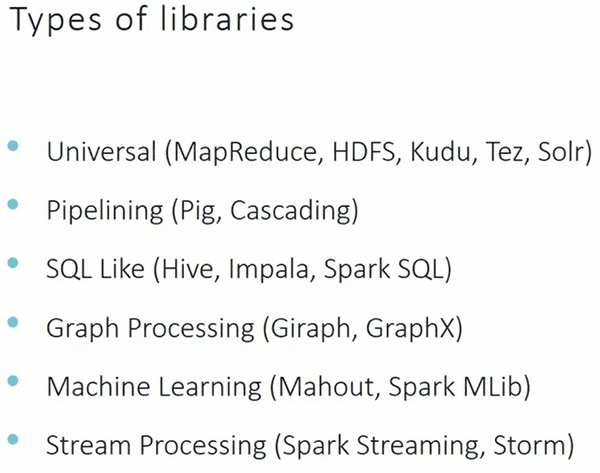

Types of libraries

- Universal: MapReduce, HDFS, Kudu, Tez, Solr

- Pipelining: Pig, Cascading

- SQL-like: Hive, Impala, Spark SQL

- Graph Processing: Giraph, GraphX

- Machine Learning: Mahout, Spark MLlib

- Stream Processing: Spark Streaming, Storm

Hadoop 3.0 adds support for running YARN applications in Docker containers.

Running Options

- Open source: plain Apache Hadoop, rarely used on its own.

- Pre-built enterprise-ready distribution

  - Cloudera

  - Hortonworks

  - MapR

- Cloud-managed cluster

  - Amazon EMR

  - HDInsight

  - AWS, Google and Azure have spot instances (can greatly reduce costs)

Most Hadoop vendors provide pre-configured images in popular cloud provider marketplaces (AWS, Azure, GCP).

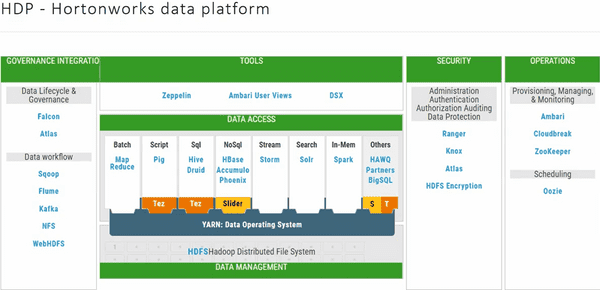

HDP - Hortonworks data platform

- Governance Integration: data workflow with Sqoop, Flume, Kafka, NFS, WebHDFS

- Tools: Zeppelin, Ambari User Views, DSX

- Security: Ranger, Knox, Atlas, HDFS Encryption

- Operations: Ambari, Cloudbreak, ZooKeeper, Scheduling, Oozie

Data Access:

- Batch: MapReduce

- Script: Pig

- SQL: Hive, Druid

- NoSQL: HBase

- Stream: Storm

- Search: Solr

- In-Memory: Spark

- Others: BigSQL

YARN:

- Masters

- Slaves

Ambari:

- Install PuTTY to be able to SSH into the HDP Sandbox

- Open PuTTY

- Set the hostname to 127.0.0.1

- Set the port to 2222

- Connect with the credentials root/hadoop

- You will be prompted to change the root password

- Then run `ambari-admin-password-reset` to set a new Ambari admin password

- Run `ambari-agent restart`
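If you are not on Windows, the PuTTY settings above map onto a single OpenSSH command. The short Python sketch below only assembles that command from the host, port and user given in these notes; the helper name `sandbox_ssh_command` is hypothetical, and the snippet assumes an `ssh` client is installed if you go on to run the result.

```python
import shlex


def sandbox_ssh_command(host="127.0.0.1", port=2222, user="root"):
    """Build the ssh argument list for the sandbox settings listed above."""
    return ["ssh", "-p", str(port), f"{user}@{host}"]


command = sandbox_ssh_command()
print(shlex.join(command))  # ssh -p 2222 root@127.0.0.1
```

Paste the printed command into a terminal, then continue with the password change and the two Ambari commands from the list.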